Model Merging, Mixtures of Experts, and Towards Smaller LLMs

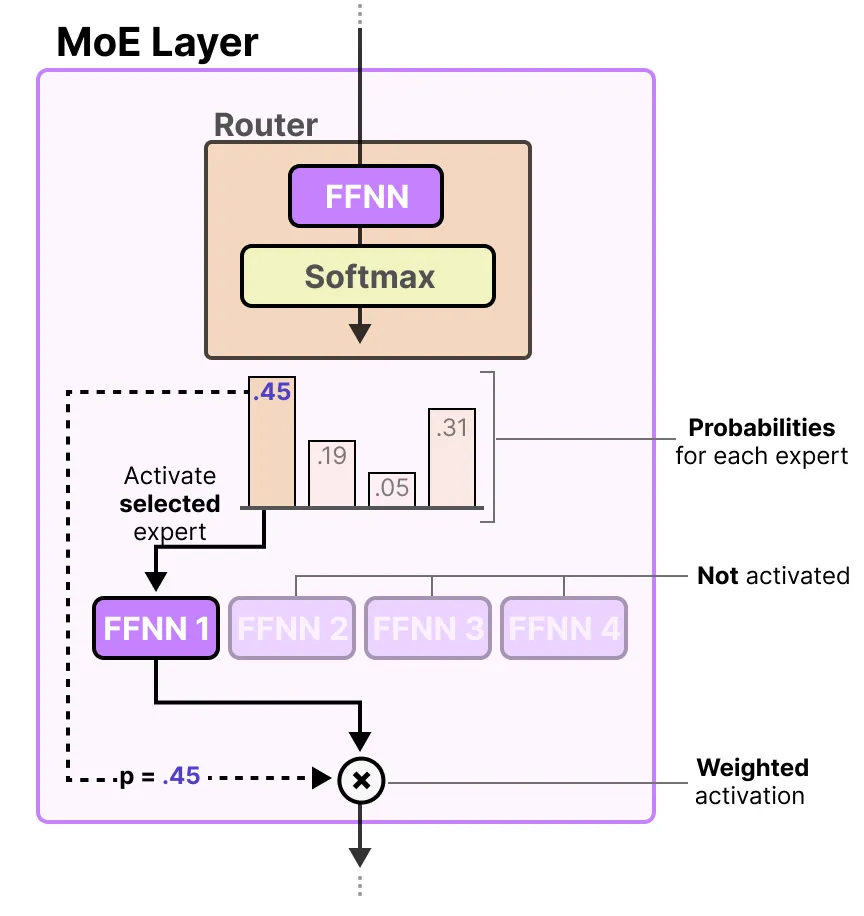

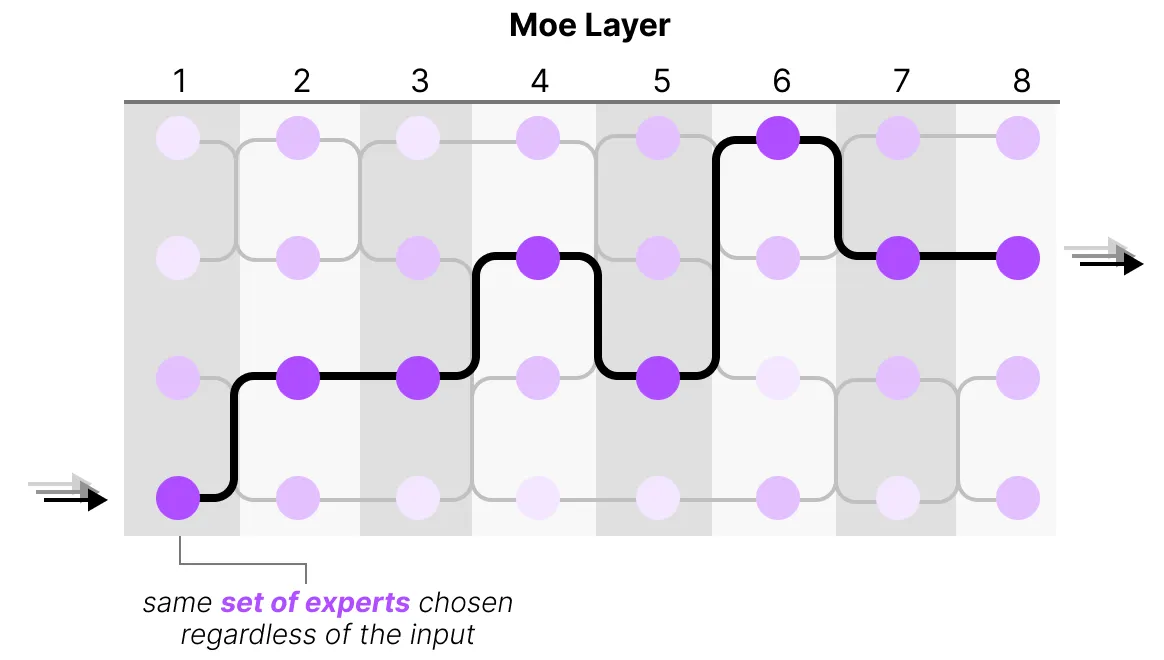

to prevent overfitting on the same experts
